Overfitting is a subject that isn’t discussed nearly enough.

In machine learning, overfitting is when an algorithm learns a model so specialized that it is unable to generalize or handle new tasks. This sounds domain-specific, but the idea can also describe many mistakes humans make in software, design, and life in general! Looking at how we deal with this problem in machine learning can help us be more systematic about avoiding it in these other arenas.

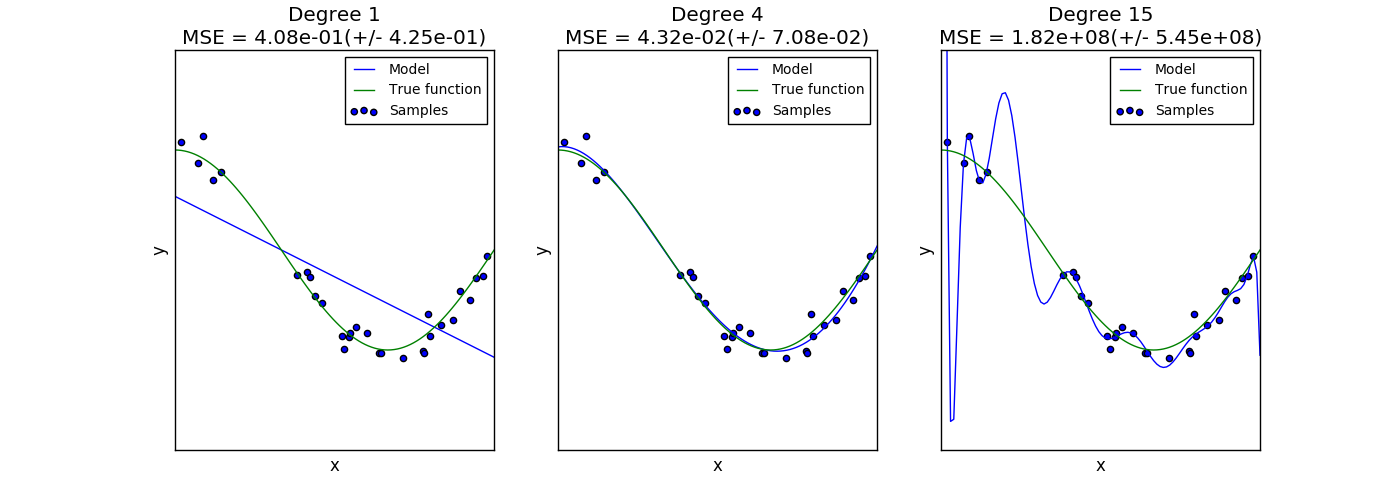

Example of under, optimal, and overfitting

Example of under, optimal, and overfitting

Before jumping into how it occurs and how we can avoid it outside ML, I’ll present a couple examples to justify the claims that this is actually a common phenomenon.

Let’s take brittle tests as an example: they occur when you test a component’s implementation instead of its interface, or when you have insufficient abstraction requiring you to bake data unrelated to the component’s behavior into the test. You know this has happened, because when you change the component’s behavior, unrelated tests break and require fixing. It’s obvious here that your tests weren’t general enough, and the need to fix them each time is a cost we’d rather avoid.

We can also see this in web design. Take for example a search results page with filters and results. It was designed assuming that the scope of the page was only to show and filter results, and became very optimized for that experience. This optimization meant the addition of charts summarizing the results would require significant design rework - much more significant than the complexity of the charts being added.

Both these examples sound like overfitting: they each specialize for specific tasks, and extra work must be done before they can be used for other, even related, tasks. These examples are fairly vague, but it’s likely we’ve all seen examples of them in the past.

How Does It Happen?

In machine learning, overfitting often happens when the problem you’re modeling has more complexity than data to learn it. If you have 1,000 data points and 2,000 features of interest, you’re very unlikely to be able to pick out what truly predicts behavior. This is called the Curse of Dimensionality, and it manifests as parts of the model that are much too specific, but still predict the dataset you are training with.

But how does it happen for software, designs, etc? The same way, actually! In all of our examples we discussed models, software or design or otherwise, becoming much too close to the specific, current problem, and requiring a lot of work to generalize to a close but new problem.

Running with the machine learning analogy, it would be fair to say that in each case the implementor didn’t have the data available to recognize what would be shortcomings in their implementation. That’s fair, but…

How Do We Prevent It?

Classically, there are two ways to prevent overfitting in machine learning: more data or less complexity. More data is self evident, but what do we mean by less complexity?

The complexity of a model can be reduced by changing the way you’re looking at the problem. For example, recent work in retention prediction showed that changing the problem definition from “Will the user return within N days?” to “How many days until the user returns?” makes the problem simpler, and allows algorithms to learn better abstractions with which to predict retention.

“The most likely hypothesis is the simplest one consistent with the data.” - Ockham’s Razor

In the same way, we can trim down the complexity of our problem to include only the highest value requirements or functionality. This is both valuable for reducing time to market, but also reduces the amount of rework necessary when important new requirements are discovered.

“More data” can be hard, but generally it means talking to more of your customers about product prototypes or mock-ups, or getting opinions from other coworkers on the potential application of a given software component.

Of course, there is always the question of whether complicated systems are merely mirroring the necessary complexity of the underlying problem, and sometimes this is the case.

More than anything, I find that simply asking myself, “Am I overfitting this problem?" is enough to give the necessary context.